Optimizing Image Processing

Improving image quality can be time-consuming, and choosing the right filters and operations for a particular application can be difficult. The following recommendations can help you optimize an image processing workflow.

Before you start post-processing, evaluate the general characteristics of your images, including noise, blur, background intensity variations, and the overall pixel value distribution (histogram profile). Check shadowed regions to determine how much detail is present, and also examine bright features and areas of intermediate pixel intensity. See Profiling Intensity and Viewing Region Histograms for information about examining intensity profiles and distributions.
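As a rough illustration of this kind of evaluation outside the application, the sketch below summarizes a list of pixel intensities with basic statistics and a coarse histogram. The function name `intensity_summary`, the 8-bit value range, and the sample values are assumptions made for the example. They are not part of Dragonfly.

```python
from collections import Counter
from statistics import mean, pstdev

def intensity_summary(pixels, bins=4, max_value=255):
    """Summarize a flat sequence of pixel intensities (assumed range 0..max_value)."""
    width = (max_value + 1) / bins
    # Assign each pixel to a bin; clamp so max_value falls in the last bin.
    counts = Counter(min(int(p // width), bins - 1) for p in pixels)
    return {
        "min": min(pixels),
        "max": max(pixels),
        "mean": mean(pixels),
        "std": pstdev(pixels),  # a wide spread in a flat region can hint at noise
        "histogram": [counts.get(b, 0) for b in range(bins)],
    }

# Hypothetical 8-bit sample: mostly dark pixels with a few bright features.
sample = [10, 12, 15, 20, 22, 30, 35, 40, 120, 130, 240, 250]
summary = intensity_summary(sample)
print(summary["histogram"])  # counts per bin, darkest bin first
```

A histogram weighted heavily toward the first bin, as in this sample, suggests that most of the detail sits in the shadowed regions.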

In some cases, it is best to remove unneeded parts of your data prior to post-processing (see Cropping Datasets).

It is good practice to adjust a processing workflow and optimize its settings on a limited subset of the data first, using the visual feedback provided in the preview window (see Previewing Operations). The effect and performance of some filtering operations vary with the input image(s), the filter settings, and the characteristics encoded in the structuring element, so you should also verify the results on representative slices throughout the stack. See Image Filters and Settings for information about usage and filter settings.
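One simple way to choose representative slices is to spread them evenly through the stack. The sketch below is an illustration only. The helper `representative_slices` is hypothetical, and evenly spaced sampling is an assumption that may not suit data whose content changes abruptly.

```python
def representative_slices(n_slices, count):
    """Return `count` slice indices spread evenly from the first to the last slice."""
    if n_slices < 1 or count < 1:
        raise ValueError("n_slices and count must be positive")
    count = min(count, n_slices)
    if count == 1:
        return [n_slices // 2]  # a single check: use the middle slice
    # Integer arithmetic keeps the first and last slices in the selection.
    return [i * (n_slices - 1) // (count - 1) for i in range(count)]

# Hypothetical stack of 100 slices, checked at 5 positions.
indices = representative_slices(100, 5)
print(indices)
```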

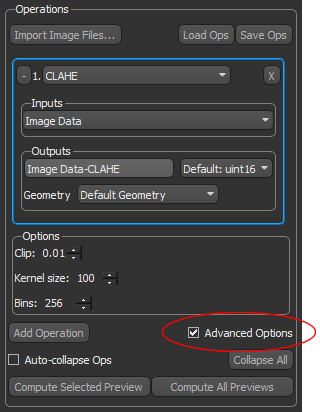

The Advanced Options for image processing let you process multi-scale images in selected operations, as well as select different geometries and data types for your image processing outputs. Multi-scale images are images with different geometries and/or image spacing.

To enable the Advanced Options, check Advanced Options in the Operations box, as shown below.

For best results, you should be aware of the following:

- Best results are usually obtained when the image spacing of all inputs and the selected output are the same (see Dataset Properties for information about viewing and modifying image spacing).

- Results may be poor if the point of origin (position in space) is not the same, or is not an even factor, for all inputs and the selected output (see Advanced Dataset Properties for information about changing a dataset's position in space).

- The point of origin (position in space) will determine where pixel values are written. If your image data was prepared by cropping, the position in space will be relative to the original data.
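The points above can be illustrated with a short calculation. The sketch below assumes an axis-aligned dataset in which the position of a voxel is its origin plus its index multiplied by the image spacing. The helper names are hypothetical and this is not Dragonfly's API.

```python
import math

def same_spacing(spacing_a, spacing_b, rel_tol=1e-9):
    """Return True if two spacings match on every axis, within a tolerance."""
    return all(math.isclose(a, b, rel_tol=rel_tol)
               for a, b in zip(spacing_a, spacing_b))

def cropped_origin(origin, spacing, start_index):
    """Origin of a cropped dataset, expressed relative to the original data.

    Assumes position = origin + index * spacing along each axis.
    """
    return tuple(o + i * s for o, s, i in zip(origin, spacing, start_index))

# Hypothetical values: original origin, spacing, and first voxel kept by the crop.
origin = (0.0, 0.0, 0.0)
spacing = (0.5, 0.5, 1.0)
crop_start = (10, 20, 5)

new_origin = cropped_origin(origin, spacing, crop_start)
print(new_origin)  # (5.0, 10.0, 5.0)
print(same_spacing(spacing, (0.5, 0.5, 1.0)))  # True
```

Under this assumption, the cropped dataset keeps the same image spacing, and its origin is offset from the original by whole voxels.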

Per-slice 2D interpretation is generally much faster than 3D processing and may give similar results. When the interpretation is set to 3D, the filter is applied to a 3D slab whose depth depends on the encoded parameters.
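The speed difference can be estimated with simple arithmetic. The sketch below counts the neighborhood reads needed by a direct, non-separable kernel and is only an approximation. It ignores separable implementations, memory access patterns, and any optimizations in the actual software, and the function names and dataset shape are assumptions.

```python
def reads_per_voxel(kernel_size, dims):
    """Neighborhood size of a cubic kernel applied in `dims` dimensions."""
    return kernel_size ** dims

def total_reads(shape, kernel_size, dims):
    """Approximate reads for a direct kernel filter over every voxel."""
    voxels = 1
    for n in shape:
        voxels *= n
    return voxels * reads_per_voxel(kernel_size, dims)

# Hypothetical stack: 100 slices of 512 x 512 pixels, kernel size 3.
shape = (100, 512, 512)
cost_2d = total_reads(shape, kernel_size=3, dims=2)  # 3 x 3 per slice
cost_3d = total_reads(shape, kernel_size=3, dims=3)  # 3 x 3 x 3 slab
print(cost_3d / cost_2d)  # 3.0
```

The ratio grows with kernel size: in this simple model, a kernel of size 7 makes 3D processing about seven times as costly as per-slice 2D processing.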

An important issue in kernel-based image filtering is that the convolution kernel extends beyond the borders of the image when it is applied to border pixels. A common approach to dealing with border effects is to pad the original image with extra rows and columns based on the filter size. The technique used in Dragonfly to remedy border effects is to reflect the image at the borders. For example, column[-1] = column[1], column[-2] = column[2], and so on.
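The reflection rule can be sketched in a few lines of plain Python. This is an illustration of the indexing described above, in which the edge sample itself is not repeated. It is not Dragonfly's implementation, and the function names are hypothetical.

```python
def reflect_pad_row(row, pad):
    """Pad a sequence by mirroring about its edge samples (edges not repeated)."""
    if pad >= len(row):
        raise ValueError("pad must be smaller than the sequence length")
    left = [row[i] for i in range(pad, 0, -1)]        # row[pad], ..., row[1]
    right = [row[-1 - i] for i in range(1, pad + 1)]  # row[-2], ..., row[-1 - pad]
    return left + list(row) + right

def reflect_pad_image(image, pad):
    """Reflect-pad a 2D image given as a list of rows."""
    padded_rows = [reflect_pad_row(r, pad) for r in image]  # pad the columns
    return reflect_pad_row(padded_rows, pad)                # then pad the rows

row = reflect_pad_row([10, 20, 30, 40], 2)
print(row)  # [30, 20, 10, 20, 30, 40, 30, 20]

image = reflect_pad_image([[1, 2, 3],
                           [4, 5, 6],
                           [7, 8, 9]], 1)
for r in image:
    print(r)
```

In the padded row, the value at position -1 equals the value at position 1 (20), and the value at position -2 equals the value at position 2 (30). This matches the behavior of `numpy.pad` with `mode="reflect"`. Note that `scipy.ndimage` calls this convention `mirror`, while its `reflect` mode repeats the edge sample.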

For more information, go to http://homepages.inf.ed.ac.uk/rbf/HIPR2/convolve.htm and http://homepages.inf.ed.ac.uk/rbf/HIPR2/kernel.htm.